- Home

- Microsoft IIS

- IIS Support Blog

- Troubleshooting IIS apps: one structured approach

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

We have a problem with our IIS application. Immediately seeing an error page with the cause is the lucky scenario. Sometimes we need to work harder to figure out what’s happening. Having a plan for investigation and knowing how things work together at a high level helps isolating where the cause might be.

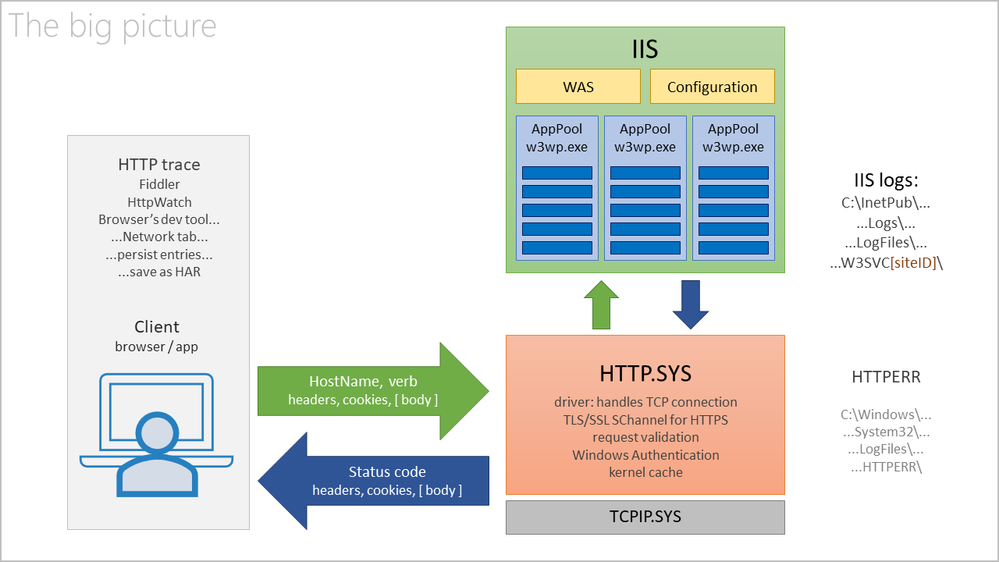

Let’s see the big picture: how an HTTP request travels from the client to IIS, how the response is generated, and what traces we look at, or collect, for diagnosing.

This article also explains why we need to collect the basic IIS troubleshooting files as per http://linqto.me/iis-basic-files. And what we hope to find there, before digging really deep.

In short, we look at:

- HTTP traces from client side

- The HTTPERR logs of the HTTP.SYS driver

- IIS logs and, if collected, Failed Request Traces, aka FREB logs

- Windows Events from Event Viewer, System (Source=WAS) and Application logs

- Ultimately, we collect memory dumps from IIS worker processes, w3wp.exe

HTTP traces from client side

Why?

The issue is not always obvious. There is no error page displayed by the browser, for example. It may be just a freeze, because AJAX calls are failing. Sometimes the client is not even a browser. Or the client may not event be able to contact the server at all, such as when the SChannel used by HTTPS is failing.

More often than not, we need to see what the client is sending, what it receives if anything, in what sequence etc.

How?

Use Fiddler, HTTPWatch, or at least the network tab in the browser’s developer tools. For the last option, don’t forget to preserve logs, in case requests are deleted from a page navigation to the next. Of course, you should be reproducing the issue while collecting the trace.

If using Fiddler for HTTPS traffic, don’t forget to decrypt before sharing with others.

More:

The article at http://linqto.me/http-trace details on collecting traces.

Wikipedia documents well the HTTP response status codes and so does the Mozilla developer site, just in case you want to interpret these in the HTTP trace.

HTTP.SYS logs

Why?

We tend to forget there are elements between the client and IIS. The web server stands on top of HTTP.SYS driver. Requests from clients have to pass through HTTP.SYS, which handles the TCP connections, before actually getting to IIS web server.

HTTP.SYS also establishes the SSL/TLS SChannel used in HTTPS, the Secure Channel tunnel; see http://linqto.me/https. Request validation happens in HTTP.SYS; for instance, too large an HTTP request header may cause rejection. And with certain configurations, HTTP.SYS performs the Windows Authentication of HTTP requests with Kerberos. All these happen before relaying requests to IIS; any failure here would prevent an HTTP request to reach IIS.

If requests are not getting to IIS, a visit to these HTTP.SYS logs may reveal why.

How?

Well, this is simple. Simply go and see the logs at the following path:

C:\Windows\System32\LogFiles\HTTPERR\

On a side note, that path can be customized, configured within the Registry. The following key would reveal it:

HKLM:\SYSTEM\CurrentControlSet\Services\HTTP\Parameters\

More:

The reference from http://linqto.me/httpsys helps explaining entries found in HTTP.SYS logs.

Applications that rely on the HTTP.SYS, like IIS itself, need to configure the driver. A few settings may be seen at http://linqto.me/httpsysconfig. For example, when you set a certificate for an HTTPS binding in IIS Manager, that setting is going down to HTTP.SYS, because IIS itself does not handle HTTPS; it relies on HTTP.SYS for that.

Some HTTP.SYS driver configuration can be consulted with netsh. Try the following, for a starter:

netsh http show help

IIS logs

Why?

Looking in the IIS logs we can confirm that requests are getting from clients to IIS in the first place. Further on, using tools like Log Parser and Log Parser Studio we can query and analyze the logs to keep track of the overall server health or isolate certain requests for problem reproducing. We can discover patterns or figures – such as average time-taken – that would help with further investigation steps.

We get some extra information here. From the client side, we may see the HTTP response status codes; but IIS logs will also show sub-status codes and Win32 processing status - these may reveal more about what’s going wrong. Sub-status codes and Win32 processing status codes are not sent to client.

How?

The default IIS logs location is like the following. The actual path can be customized in the IIS Manager for a site, selecting the Logging feature.

C:\inetpub\logs\LogFiles\W3SVC[siteID]\

Find the SiteID selecting the Sites node in IIS Manager, or looking into the applicationHost.config.

More:

The reference of the HTTP response status codes sent by IIS can be found at http://linqto.me/http-response-codes. Generally, a response with status and sub-status 500.0 means error(s) in the application being executed by IIS.

Keep in mind that IIS log entries have the time in UTC and the response time-taken generally includes the network transfer time: from the first byte of the request, to the last byte of the response.

As for using the Log Parser and Log Parser Studio for analyzing the logs, there are resources helping you get started, such as LogParserPlus.com or this blog post.

Windows Event Viewer entries

Why?

While HTTP traces, IIS logs and HTTP.SYS may show us the overall info about requests and responses, helping us isolate, we still know little about how the request processing went.

Windows Event Viewer is the next stop, a reliable place where problems in the IIS processes (or the applications they execute) leave traces.

How?

System log

WAS, Windows Activation Service, is the IIS component that creates and monitors IIS w3wp.exe worker processes, for executing the apps in the application pools. WAS logs its activity and issues in the System log.

Filtering this log with Source=WAS, we get an overview about IIS application pool processes. Do they stop responding? Or do they crash? And if yes how often?

Note that the SChannel provider, used by the HTTP.SYS for the HTTPS communication, is also reporting issues in this events log.

Application log

Let’s remember that the w3wp.exe IIS processes are executing web applications, our code. To do that, w3wp.exe loads the .NET framework and the Asp.Net. If these processes or applications encounter problems, they are leaving traces in the Application log.

If an exception occurs in our application, and our code is not handling it, the Asp.Net framework will try its best to handle it for us. The exception may result in an error page shown to the application’s user, but at least the execution will continue for the other requests. Such events will be present in the log with something like Source=.NET or Source=Asp.Net. If we’re lucky, we get more than the exception code: we may get the context of the exception, such as the functions call stack or even better, lines of code that leaded to the exception.

This exception handling by Asp.Net avoids a w3wp.exe to crash with unhandled exceptions. But there are contexts when Asp.Net cannot handle the exception, nor did our application’s code. It becomes a second-chance exception. The OS will handle it its way: terminate the process to avoid any worse things to happen. In such rare instance, we would see a Windows event in the Application log with the Source=Application, mentioning the w3wp.exe as a process, with its PID, and the exception code.

More:

Keep in mind that some other relevant events are not collected by default. One example: CAPI events, Cryptographic API. The CAPI is used by the SChannel provider when HTTP.SYS establishes HTTPS communication; see http://linqto.me/https. So, investigating HTTPS issues might lead to enabling CAPI logging.

Note: Unlike the IIS logs, the Windows Event Viewer is showing event time in local system’s time-zone, not in UTC. Only the Details XML view is showing the UTC timestamp.

FREBs, Failed Request Traces

Why?

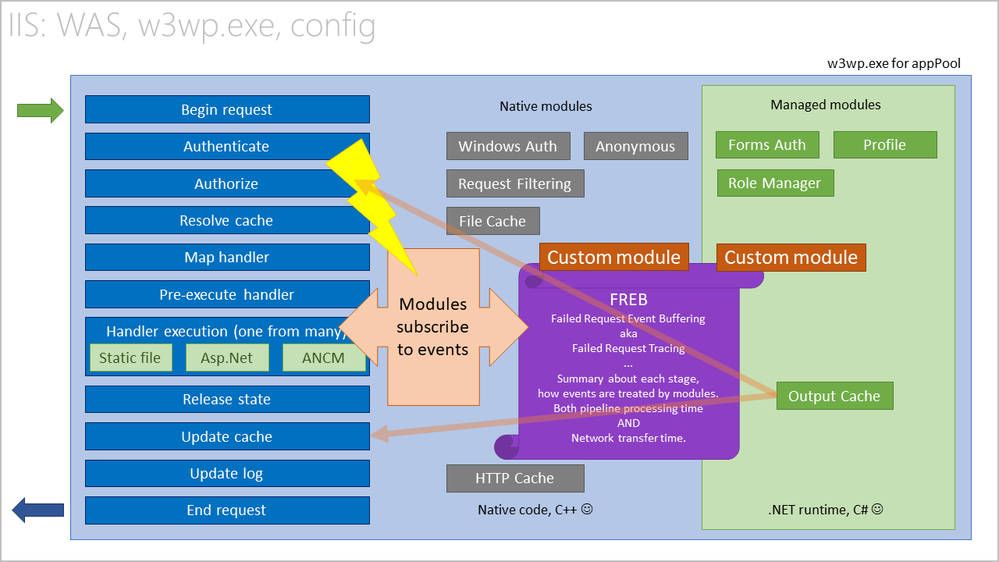

If we’re still on our trail to hunt down the problem cause, let’s look inside IIS, how it processes requests in those w3wp.exe instances.

To generate a response, requests are processed in a pipeline. At each step in the pipeline, notifications are sent to various modules that are loaded inside w3wp.exe. The IIS native or custom modules are subscribing to these notifications, and they may work on the request as it travels through the pipeline.

Depending on its configuration, a module may do something or nothing on the request, but it will surely report events or its activity. FREB, Failed Request Event Buffering, can capture these events and persist them in log files.

These logs show what happens at stages in pipeline, how long does it take for a response to be sent.

How?

The Failed Request Tracing is an optional IIS feature. We need to make sure it is installed, to capture FREB logs.

To collect, we add rules telling in what conditions a trace should be taken. Then we enable tracing for a specific site.

Of course, trace collection should happen while the problem is reproduced, to capture the conditions causing it.

Default location of FREB trace log files is below:

C:\inetpub\logs\FailedReqLogFiles\W3SVC[siteID]\

Find the SiteID selecting the Sites node in IIS Manager, or looking into the applicationHost.config.

More:

Keep in mind that tracing does incur a minor performance penalty; after we collect data, we should disable it.

A FREB only collects a (configurable) number of trace logs, and these usually take less than 1 MB each; disk space should not be an issue.

On the IIS documentation site there are a couple of articles on using FREB to troubleshoot issues.

The FREB logs are XML files that should be opened with Internet Explorer. Make sure that the freb.xsl file generated with the trace collection is in the same directory. My article at http://linqto.me/reading-freb details how these traces can be looked at.

If we see a response status code of 500.0 or an error generated with the handler, it means an exception in the executed application. The handler is the one that generates the response for the client; usually, it is our code that is executed by IIS.

Collect and analyze memory dumps

Why?

There are cases when the root cause of the issue is deeply buried in the code executed by the .NET framework, inside the w3wp.exe. Threads executing our code to generate the responses encounter exceptions, or they get blocked. Their behavior is either caused by the execution path, by the application’s objects in memory, or by certain conditions. Suffice to say that there are cases when we need to look deep into the process memory for loaded modules, call stacks, objects etc. We need memory dumps for post-mortem analysis.

How?

Dump analysis for debugging is expensive – it requires expertise to do the debugging. In addition, the dump collection itself needs to be carefully planned, otherwise the collected dump(s) may not capture the exact context with the root cause.

First of all, capture a FULL memory dump. We need to have access to all the committed memory of the process to see the objects.

Secondly, capture several dumps, not just one. It is always better to have too much data and discard than having insufficient data.

The process dump is like a photo, a snapshot of the memory. A dump collected a split second too late will show call stacks that are not capturing the exception context. Or maybe objects are no longer in the memory due to garbage collection.

A single dump can only show one point in time. A series of dumps from the same process instance – same PID, process ID – can show trends: how things are unfolding, if threads remain blocked, how memory heaps are evolving etc.

Take at least 3 to 5 dumps; the more, the better. But if it’s not from the same PID, remember that new PID means a totally new memory space; all objects and threads are lost with a killed process.

Allow 5 to 10 seconds between consecutive dumps, if we’re studying performance issued. Allow the committed memory to grow between dumps if we’re studying memory leaks. Some 300 MB, 500 MB or 1 GB – whatever is relevant as growth for a certain period.

Read my article at http://linqto.me/dumps if you're not familiar with exception handling in Windows and IIS; it also details how dumps can be collected to study these exceptions or performance issues.

Tooling for dump collection

Now, you cannot stay and watch over the w3wp.exe to see when the exception occurs. And performance issues are not always easily reproducible, or we can’t be around to witness. So, we rely on automated tools that are attaching to w3wp.exe process instances and are monitoring those for the occurrences of the investigated issue. When it happens, the dump is collected and saved to disk.

One such tool is called Debug Diagnostics. We need to download Debug Diagnostic and install at least the dump collection component on the IIS machine. DebugDiag has intuitive UI and can monitor all w3wp.exe processes created for a specific application pool:

- https://www.microsoft.com/en-us/download/details.aspx?id=49924 v2.2 (if 32-bit system)

- https://www.microsoft.com/en-us/download/details.aspx?id=102635 v2.3.1 (only supports 64-bit OS)

The other tool is ProcDump, having similar capabilities as DebugDiag, but requiring no installation. It is a command-line tool, so it can be used in environments with no UI, such as Windows Server Core installations or Azure App Services.

Tooling for dump analysis

The Debug Diagnostics mentioned above has an Analysis component. It allows for automated analysis on collected dumps, which may reveal a host of common issues usually found in IIS and other types of processes. And even with less common issues, it may show valuable hints on what is causing the problem, such as threads stuck waiting on database or network calls.

When the automated analysis performed by DebugDiag is not enough, we must switch to “manual” analysis. This is performed with WinDbg. But using it requires some experience, knowledge on inner workings of a process and memory, and spending some time accommodating with the debugger commands.

More:

All the tools mentioned here are free from Microsoft, well documented. And they have been around for quite some time, so we can find a lot of additional resources on the internet on how to use them, including on this blog. For instance, for setting up DebugDiag Collection with rules on taking dumps...

- When exceptions are thrown by execution threads in a process;

- For crashing, or when the worker process is forcibly shut-down;

- For performance issues, when requests are being served too slow, or too much CPU is being used;

- To detect memory leaks, when the process is consuming increasingly more memory.

Both DebugDiag Collection and ProcDump act like debuggers. They attach to a process and it monitor it. Under certain configurable conditions, a memory dump of the process is... dumped on the disk.

A debugger takes precedence and is notified with all exception triggered by a process code, handled or not. Well, an unhandled exception becomes a second-chance one, causing process to crash (to be terminated by the OS). While attached to a process, DebugDiag and ProcDump will log all exceptions from that process and could also take memory dumps. The exception logs may be useful for the developers or sysadmins, that could take measures to fix or avoid.

Finally

The above diagnosing data collection process does not cover all scenarios. Sometimes performance monitoring is used, or perhaps .NET execution tracing is a better option. The investigation can take on other roads...

However, the steps here cover a large range of issue investigations; they constitute a pattern that applies in many of the cases.

All the best of luck!

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.